Coherence Federated Caching Configuration

With the recent 12.2.1 release of Oracle Coherence, it has become possible to keep your cached data synchronized across multiple data centers using the Federated Caching feature of the data grid.

Unfortunately, Oracle doesn’t provide clear documentation on how to set up the synchronization, so let’s walk through everything required for proper data replication across your data centers.

Step-by-step

- Before you start

- Disable local storage

- Switch to Proxy model

- Change your cache type to Federated

- Define Federation participants

- Open required ACLs

Before you start

As already mentioned, the Federated Caching feature is available only starting with Coherence 12.2.1. If you’re running one of the previous versions, you might want to use Push Replication, an open source solution from the Coherence Incubator, or consider some workarounds to get your cache replicated.

Disable local storage

If your application is part of the Coherence cluster and has local storage enabled, be aware that it will talk directly to a storage-enabled member on the other side. In other words, it will participate in the synchronization process.

To avoid that, I recommend disabling local storage for your application.
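As a minimal sketch, local storage can be turned off in the cache configuration used by the application node with the `<local-storage>` element. The scheme and service name `federated` are placeholders of mine, and I'm assuming the application node uses a federated scheme like the storage nodes do; adjust it to whatever scheme your application actually declares.

```xml
<!-- Cache configuration fragment for the application (non-storage) node.
     "federated" is a hypothetical scheme/service name; use your own. -->
<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <!-- This member joins the service but does not hold any cache data. -->
  <local-storage>false</local-storage>
  <backing-map-scheme>
    <local-scheme/>
  </backing-map-scheme>
  <autostart>true</autostart>
</federated-scheme>
```

The dedicated storage nodes keep `<local-storage>` set to `true` (or simply omit the element), so only they end up exchanging data with the remote cluster.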

Switch to Proxy model

If you decide to disable local storage, there is not much sense in keeping your application as a member of the Coherence cluster. Instead, you might want to run it as an Extend client connected to a Proxy Service.

Oracle provides a good how-to guide on setting this up.
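For orientation, a client-side cache configuration for an Extend client could look roughly like the sketch below. The scheme name `extend-remote`, the service name `ExtendTcpCacheService`, and the catch-all `*` cache mapping are illustrative choices of mine. The address reuses the ClusterA example host from this post and port 8070 is the proxy port listed in the last section; both are assumptions about where your proxy actually listens.

```xml
<?xml version="1.0"?>
<!-- Client-side cache configuration for a Coherence*Extend client.
     Names, address and port are placeholders, not values from a real setup. -->
<cache-config xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
              xmlns="http://xmlns.oracle.com/coherence/coherence-cache-config"
              xsi:schemaLocation="http://xmlns.oracle.com/coherence/coherence-cache-config coherence-cache-config.xsd">
  <caching-scheme-mapping>
    <cache-mapping>
      <!-- Route every cache through the proxy. -->
      <cache-name>*</cache-name>
      <scheme-name>extend-remote</scheme-name>
    </cache-mapping>
  </caching-scheme-mapping>
  <caching-schemes>
    <remote-cache-scheme>
      <scheme-name>extend-remote</scheme-name>
      <service-name>ExtendTcpCacheService</service-name>
      <initiator-config>
        <tcp-initiator>
          <remote-addresses>
            <!-- Address and port of a proxy node in the local cluster. -->
            <socket-address>
              <address>192.168.10.1</address>
              <port>8070</port>
            </socket-address>
          </remote-addresses>
        </tcp-initiator>
      </initiator-config>
    </remote-cache-scheme>
  </caching-schemes>
</cache-config>
```

The proxy node needs a matching `<proxy-scheme>` with a TCP acceptor on the same port. Since the client only ever talks to a proxy in its own data center, it stays out of the federation traffic entirely.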

Change your cache type to Federated

All members of your cluster (proxy and storage nodes) should have a Federated Cache Scheme defined in your overridden coherence-config.xml. Nothing special here, just follow the steps described in Defining Federated Cache Schemes.
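A minimal sketch of such a cache configuration is shown below. The cache name `example` and the scheme/service name `federated` are placeholders, and the backing map is a plain local scheme just to keep the example short.

```xml
<?xml version="1.0"?>
<!-- Cache configuration sketch for cluster members (proxy and storage nodes).
     "example" and "federated" are hypothetical names. -->
<cache-config xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
              xmlns="http://xmlns.oracle.com/coherence/coherence-cache-config"
              xsi:schemaLocation="http://xmlns.oracle.com/coherence/coherence-cache-config coherence-cache-config.xsd">
  <caching-scheme-mapping>
    <cache-mapping>
      <!-- Map the cache to the federated scheme instead of a distributed one. -->
      <cache-name>example</cache-name>
      <scheme-name>federated</scheme-name>
    </cache-mapping>
  </caching-scheme-mapping>
  <caching-schemes>
    <federated-scheme>
      <scheme-name>federated</scheme-name>
      <service-name>federated</service-name>
      <backing-map-scheme>
        <local-scheme/>
      </backing-map-scheme>
      <autostart>true</autostart>
    </federated-scheme>
  </caching-schemes>
</cache-config>
```

If you already have a `<distributed-scheme>` for the cache, the change mostly comes down to replacing it with a `<federated-scheme>` and keeping the same cache mapping.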

Define Federation participants

Your Coherence clusters in different data centers should be able to discover each other and then establish the communication (synchronization) process. For that purpose, you have to specify at least one storage node from each cluster in the participants list in tangosol-coherence-override.xml:

```xml
<federation-config>
  <participants>
    <participant>
      <name>ClusterA</name>
      <name-service-addresses>
        <address>192.168.10.1</address>
        <port>8080</port>
      </name-service-addresses>
    </participant>
    <participant>
      <name>ClusterB</name>
      <name-service-addresses>
        <address>192.168.20.2</address>
        <port>8080</port>
      </name-service-addresses>
    </participant>
  </participants>
</federation-config>
```

This override must be applied on every member of each cluster (proxy and storage nodes).

For more details, see Defining Federation Participants.
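One detail worth checking: as far as I can tell, each cluster recognizes itself in the participants list by its cluster name, so the `<name>` of a participant should match the cluster name of that cluster's members. Treat this as an assumption and verify it against Oracle's participant documentation for your version. A sketch of the matching override for members of ClusterA (root element namespace declarations omitted for brevity):

```xml
<!-- tangosol-coherence-override.xml fragment for members of ClusterA.
     Assumption: the cluster name must equal the participant <name>. -->
<coherence>
  <cluster-config>
    <member-identity>
      <cluster-name>ClusterA</cluster-name>
    </member-identity>
  </cluster-config>
</coherence>
```

Members of ClusterB would use `ClusterB` here, while the `<federation-config>` block stays identical on both sides.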

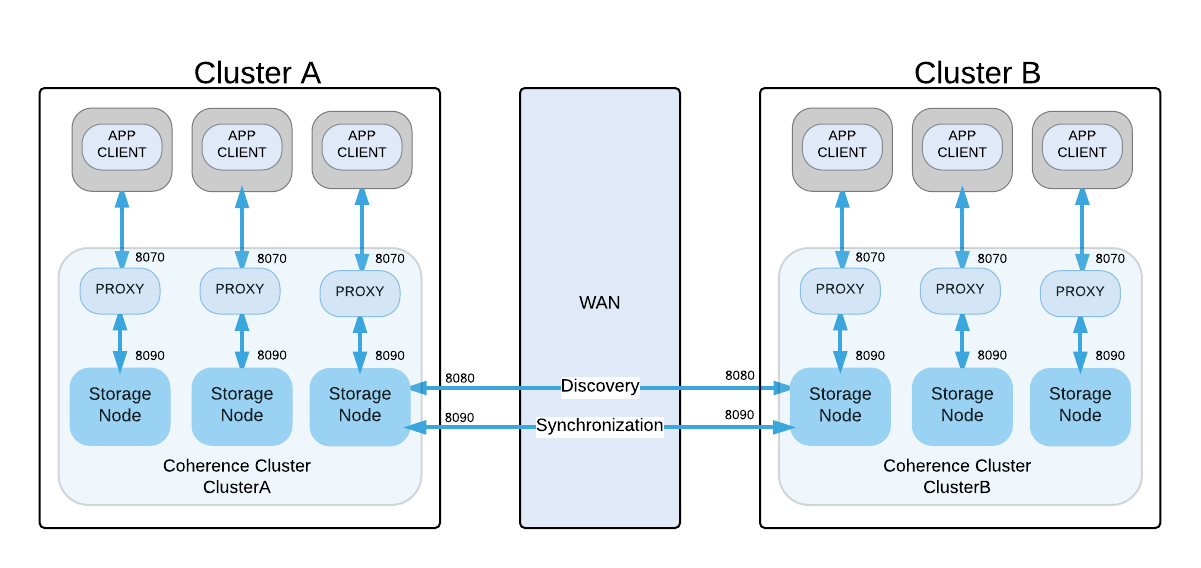

Open required ACLs

It might not be obvious, but apart from the cluster ports specified in the participants list, you also have to open the localport of each storage-enabled member on both sides.

Basically, cluster ports are needed for discovery, and localports for the synchronization process itself.

- 8070 - proxy ports (ACLs are not required)

- 8080 - cluster ports (ACLs are required)

- 8090 - localports (ACLs are required)
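Since firewall rules need fixed port numbers, it helps to pin both ports in the operational override rather than rely on defaults. The fragment below is a sketch using the example ports from the list above (8080 and 8090); it assumes one storage-enabled member per host, because with automatic port adjustment disabled a second member on the same host could not bind the same localport.

```xml
<!-- tangosol-coherence-override.xml fragment for storage-enabled members.
     Port numbers are the example values used in this post. -->
<coherence>
  <cluster-config>
    <unicast-listener>
      <!-- localport: carries the synchronization traffic -->
      <port>8090</port>
      <!-- do not silently move to another port if 8090 is taken -->
      <port-auto-adjust>false</port-auto-adjust>
    </unicast-listener>
    <multicast-listener>
      <!-- cluster port: used by remote participants for discovery -->
      <port>8080</port>
    </multicast-listener>
  </cluster-config>
</coherence>
```

With the ports pinned like this, the ACLs only have to allow 8080 and 8090 between the storage-enabled members of both data centers.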